21 Reliability Considerations in Medical Device Development

Mark Hjelle

Preparing for the Reliability Challenge

In translating early-stage medical device designs from tens of units to hundreds, thousands and perhaps millions, your product development team has many goals set by investors and internal stakeholders, including reduced unit costs, demonstrated clinical efficacy, the earliest possible revenue forecast, high production yields and other significant quality and business measures. A common validation threshold for medical products is 95% confidence in 95% device reliability. Because validation is the common gateway to clinical studies, eventual market release and revenue, there is a strong focus on the validation and reliability of the prototypes tested on the benchtop, in pre-clinical trials and in human trials. The following table shows the number of devices that must be tested, with zero failures, to demonstrate a given level of reliability at a given level of confidence.

| % Reliability | 90% confidence | 95% confidence | 99% confidence | 99.9% confidence |
| --- | --- | --- | --- | --- |
| 99.9 | 2,302 | 2,995 | 4,603 | 6,905 |
| 99 | 230 | 299 | 459 | 688 |
| 95 | 45 | 59 | 90 | 135 |
| 90 | 22 | 29 | 44 | 66 |
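Zero-failure sample sizes of this kind follow from the success-run relation: the smallest sample size n satisfies R^n ≤ 1 − C, so n = ln(1 − C) / ln(R), rounded up. The short Python sketch below is a minimal illustration rather than a validated statistical tool; it assumes zero failures and independent, identically built samples, and the function name `success_run_sample_size` is hypothetical.

```python
import math

def success_run_sample_size(reliability: float, confidence: float) -> int:
    """Smallest zero-failure sample size n such that reliability**n <= 1 - confidence."""
    if not (0 < reliability < 1 and 0 < confidence < 1):
        # R = 1 or C = 1 would need an infinite number of passing tests
        raise ValueError("reliability and confidence must be strictly between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(reliability))

# Regenerate the grid: rows are reliability levels, columns are confidence levels
confidence_levels = [0.90, 0.95, 0.99, 0.999]
for r in [0.999, 0.99, 0.95, 0.90]:
    sizes = [success_run_sample_size(r, c) for c in confidence_levels]
    print(f"{r:.1%} reliability:", sizes)
```

If even one failure is allowed, the required sample size grows further; that case needs a binomial calculation and is outside the scope of this sketch.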

The table contains no values for 100% confidence or 100% reliability, because no finite number of tests can demonstrate either, and the sample sizes needed at 99% levels and above are generally cost prohibitive. While no product can be 100% reliable, for the patient who receives your device reliability is all or nothing. For that patient there is no 90%: the device either works or it does not. Further, any failure of a Class III medical device could lead to morbidity or mortality. Device reliability is therefore a significant and ongoing challenge for the development team.

Missing the reliability target can affect the life of the overall project or the viability of a whole product line. From a business standpoint, missing reliability goals can result in: 1) missed timelines, 2) a delayed clinical start, 3) missed revenue targets prior to market release and potential quality issues in production, 4) reliability issues in the field, 5) warning letters, or 6) field corrective actions.

Addressing the Unknown to Find the Sweet Spot

Reliability is difficult to measure and understand because of the time element inherent in it. In addition, medical device reliability has historically been associated with the need for large sample sizes and lengthy testing to obtain the desired results. As computational modeling and rapid prototyping techniques and services advance, developing reliability measures for a new product will become more realistically achievable.

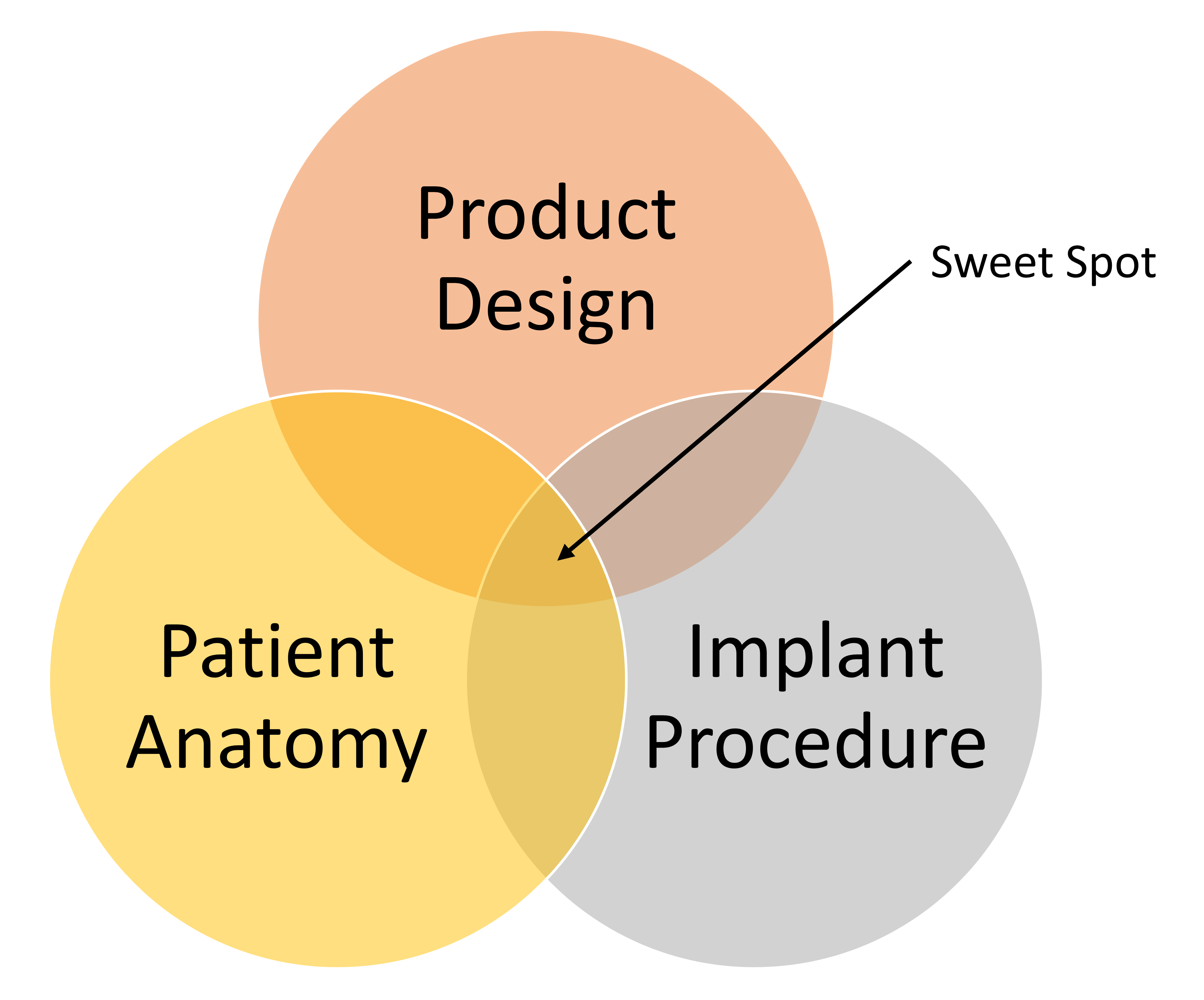

The concept of reliability for medical devices typically extends well beyond the reliability of an individual product. For example, if the product is an ICD lead, its overall reliability is influenced, at a minimum, by: 1) the lead itself; 2) the implant system, including the ICD, the programmer, software, atrial lead and CRT lead, implant accessories (stylets, introducers) and catheters; 3) the implant procedure, including lateral or medial axillary stick, cephalic cutdown, etc.; 4) the skill and product knowledge of the implanting physician; and 5) the variability in a given patient’s anatomy (both static and dynamic, including high-impact activities).

Some reliability issues are within the control of the product development team and others are beyond it. This should not be seen as a barrier, but as an opportunity to provide a better device and clinical result for the patient in addressing the disease state; in the end, that is the result everyone wants. Diligent planning and execution by the team during the prototyping and bench testing phases are vital to gaining the data and experience required to understand reliability. These insights will be essential for training and educating the clinical team on optimal use of the medical device or system.

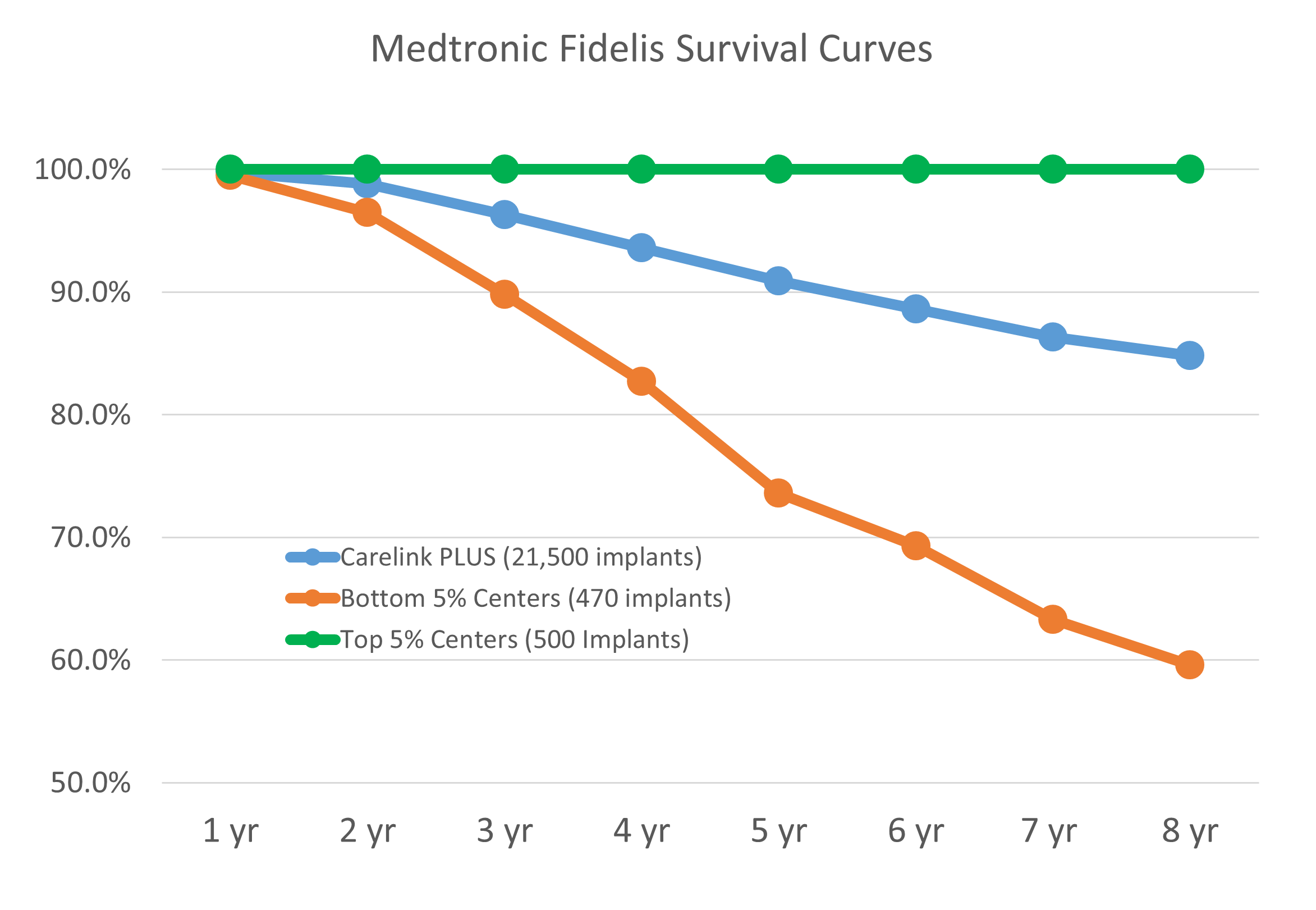

The figure above shows publicly available data from the Medtronic Fidelis performance registry that highlight the challenge and the opportunity for a team seeking to develop an innovative medical device.

The key point of these data is that the same device achieved 100% clinical survival at eight years in one group of implanters, while another group of implanters saw approximately 60% clinical survival at eight years, with similar sample sizes in each group.

For each device project team, identifying and confirming the “sweet spot” (shown in the figure below) is the goal that drives the union of innovation and reliability required to address the unmet needs within the medical device industry. In the Fidelis example, the “sweet spot” was missed.

Past medical device failures have led to increased scrutiny by regulatory bodies, which have required additional, rigorous bench and clinical testing in an effort to prevent future issues. These new requirements apply not only to the company that had the device reliability issue, but to all of the players in a given field. This in turn can slow innovation within a clinical field by: 1) increasing fear of failure; 2) dedicating more time and resources to enhanced testing; and 3) increasing the documentation needed to complete new deliverables. As a result, next-generation devices take longer to reach the market.

The “sweet spot” is not impossible to achieve, as evidenced by numerous products that have found the right reliability and clinical performance, among them the Medtronic 5076 pacing lead, the St. Jude Tendril pacing lead and the Edwards SAPIEN transcatheter heart valve.

Design and Process Bench Testing Phase to Define Reliability

Once a general framework for the device design has been chosen and realized through early prototypes, the next phase can begin, using the actual materials and components that will make up the final product. At this point, it is recommended to treat the product design as an overall system, further divided into sub-systems, in order to understand the functions and interfaces of each sub-system. For example, ICD leads have the following sub-systems or building blocks:

- Proximal End: connectors, handles and transition management to the lead body.

- Lead Body or Mid-Section: wire, conductor and lumen management from proximal to distal end.

- Distal Section: electrodes, therapy delivery, flex and shape formation, transition from the lead body.
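Breaking the lead into building blocks also makes it possible to reason about how sub-system reliability rolls up to the whole product. The sketch below assumes, as a simplification, that the three building blocks are independent and act in series (the lead fails if any one of them fails); the reliability figures and function names are hypothetical placeholders, not data for any real product.

```python
import math

def series_reliability(subsystem_reliabilities):
    """Series system: the product works only if every sub-system works (independence assumed)."""
    return math.prod(subsystem_reliabilities)

def equal_allocation(system_target: float, n_subsystems: int) -> float:
    """Reliability each of n sub-systems needs so that their series product meets the target."""
    return system_target ** (1 / n_subsystems)

# Hypothetical sub-system reliabilities for an ICD lead (placeholders, not real data)
lead_subsystems = {"proximal end": 0.99, "lead body": 0.98, "distal section": 0.985}
print(f"lead reliability: {series_reliability(lead_subsystems.values()):.4f}")
print(f"per-sub-system target for 0.95 overall: {equal_allocation(0.95, 3):.4f}")
```

Under these assumptions, sub-systems at 98% to 99% each combine to roughly 95.6%, and a 95% system target requires about 98.3% from each of three equally weighted building blocks, which is one reason sub-system targets tend to be tighter than the system-level goal.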

Once the building blocks are developed, component testing begins, using a smart design of experiments (DOE) approach. Smart DOE consists of small, focused tests to failure and of experiments both inside and outside specification, generating data at the nominal, at the edge and beyond the edge of specification that confirm the selected design for each building block. Key components of a smart DOE plan are:

- Smaller focused sample sizes: a sequential run of three sets of 10 samples versus one set of 30 samples.

- Sample Selection: DOE software is a useful tool, but all too often the software generates an experiment requiring hundreds of samples. It is acceptable to skip specific samples or reduce the number of samples with thoughtful team input.

- Test to failure: an experiment with no failures may reveal a poor test setup rather than the desired test success. Do not be lured into a false sense of security when all your tests pass. Highly Accelerated Life Testing (HALT) is a common form of “Test to Failure”; the goal of HALT is to proactively find weaknesses in a design through testing to failure. “Fail Fast and Fail Smart” is a common descriptor of this philosophy.

- Testing assemblies built wrong: for example, if an assembly requires two mechanical crimps, build samples with only one crimp. If the deliberately mis-built one-crimp samples pass, the intended two-crimp assembly has both design and process margin.
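The sequential sample-size approach in the first bullet can be sketched as a loop over small batches that stops at the first failure, so the failure mode can be examined before more samples are built. This is a minimal Python illustration under stated assumptions: `test_sample` is a hypothetical stand-in for a real bench test, and the reliability reported after each clean batch is the zero-failure lower bound R = (1 − C)^(1/n) from the success-run relation.

```python
from typing import Callable, List

def demonstrated_reliability(n_passed: int, confidence: float) -> float:
    """Zero-failure lower bound on reliability after n passes: (1 - C) ** (1 / n)."""
    return (1 - confidence) ** (1 / n_passed)

def run_sequential_plan(test_sample: Callable[[int], bool], batches: int = 3,
                        batch_size: int = 10, confidence: float = 0.90) -> List[str]:
    """Test small batches in sequence; stop at the first failure so its failure
    mode can be analyzed before further samples are built."""
    log: List[str] = []
    passed = 0
    for batch in range(1, batches + 1):
        for offset in range(batch_size):
            sample_id = (batch - 1) * batch_size + offset
            if not test_sample(sample_id):
                log.append(f"batch {batch}: sample {sample_id} failed; stop and analyze the failure mode")
                return log
            passed += 1
        lower_bound = demonstrated_reliability(passed, confidence)
        log.append(f"batch {batch}: {passed} cumulative passes, "
                   f"R >= {lower_bound:.3f} at {confidence:.0%} confidence")
    return log

if __name__ == "__main__":
    # Hypothetical bench test in which sample 17 fails
    for line in run_sequential_plan(lambda sample_id: sample_id != 17):
        print(line)
```

With no failures at 90% confidence, the lower bound rises from about 0.794 after 10 passes to about 0.926 after 30. As the test-to-failure bullet warns, a clean run like that should still prompt a check of the test setup.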

While testing is being conducted, it is important to document every failure mode; for example, record whether a bond failure was cohesive or adhesive. Knowing how a sample failed is as important as the recorded failure value. Optimizing reliability testing requires a good understanding of statistics. While there tends to be a fixation on normally distributed data, the world in general is not normal. For example, most joining techniques produce Weibull-distributed results, so all data distributions should be considered. Process capability should also be considered by measuring or estimating the process capability index (Cpk). While a high process Cpk is generally considered a good result, it is not the only goal, because process capability does not equal reliability. For example, the Takata air bags likely had a very high Cpk in manufacturing, yet the reliability of the air bags was poor and resulted in injuries and fatalities.
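To make the Cpk and distribution points concrete, the sketch below computes Cpk for a one- or two-sided specification and fits a two-parameter Weibull distribution by median-rank regression (Bernard's approximation), which is one common fitting method among several; maximum-likelihood fitting is another. The bond-strength values, the 30 N lower specification limit and the function names are hypothetical. Comparing the Weibull and normal estimates of the 1st-percentile strength illustrates how the assumed distribution can change the predicted low tail.

```python
import math
import statistics

def cpk(data, lsl=None, usl=None):
    """Process capability index for a one- or two-sided specification."""
    mu, sigma = statistics.fmean(data), statistics.stdev(data)
    sides = []
    if lsl is not None:
        sides.append((mu - lsl) / (3 * sigma))
    if usl is not None:
        sides.append((usl - mu) / (3 * sigma))
    if not sides:
        raise ValueError("at least one specification limit is required")
    return min(sides)

def weibull_fit(data):
    """Two-parameter Weibull fit by median-rank regression (Bernard's approximation).

    Linearizes the CDF as ln(-ln(1 - F)) = beta*ln(x) - beta*ln(eta) and fits a
    least-squares line; returns (beta, eta) = (shape, scale)."""
    n = len(data)
    xs = [math.log(v) for v in sorted(data)]
    ys = [math.log(-math.log(1 - (i - 0.3) / (n + 0.4))) for i in range(1, n + 1)]
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    beta = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
            / sum((x - x_bar) ** 2 for x in xs))
    eta = math.exp(x_bar - y_bar / beta)
    return beta, eta

def weibull_quantile(p, beta, eta):
    """Value below which a fraction p of the Weibull population falls."""
    return eta * (-math.log(1 - p)) ** (1 / beta)

if __name__ == "__main__":
    # Hypothetical bond strengths in newtons, with a hypothetical 30 N lower spec limit
    strength = [41.2, 44.8, 46.1, 47.5, 48.3, 49.0, 49.6, 50.2, 50.9, 51.4]
    print(f"Cpk vs. 30 N LSL: {cpk(strength, lsl=30):.2f}")
    beta, eta = weibull_fit(strength)
    normal = statistics.NormalDist(statistics.fmean(strength), statistics.stdev(strength))
    print(f"1st-percentile strength, Weibull fit: {weibull_quantile(0.01, beta, eta):.1f} N")
    print(f"1st-percentile strength, normal fit:  {normal.inv_cdf(0.01):.1f} N")
```

A good Cpk on the same data says nothing about how the failure mode behaves in use, which is the distinction the Takata example draws.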

Once quantitative data are obtained, developing final computational models of the product is a typical next step. If properly linked to bench testing, the resulting computational models can, in some cases, allow iterative designs to be submitted for regulatory approval without excessive bench testing. Monte Carlo simulation is a useful method for leveraging these data to project the future reliability of the design.
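One simple form of Monte Carlo projection is a stress-strength interference model: draw a strength from the distribution fitted to bench data, draw an in-use load from a model of the service environment, and count how often strength exceeds load. The sketch below assumes a Weibull strength and a normally distributed load; all parameter values and the function name are hypothetical, and a real analysis would derive loads from the computational models and validate them against bench testing.

```python
import math
import random

def monte_carlo_reliability(strength_scale, strength_shape, load_mean, load_sd,
                            n_trials=100_000, seed=1):
    """Stress-strength interference: estimate R = P(strength > load) by sampling.

    Strength ~ Weibull(scale, shape); load ~ Normal(mean, sd).
    Returns (estimate, binomial standard error)."""
    rng = random.Random(seed)  # seeded so the projection is repeatable
    survived = sum(
        rng.weibullvariate(strength_scale, strength_shape) > rng.gauss(load_mean, load_sd)
        for _ in range(n_trials)
    )
    r = survived / n_trials
    return r, math.sqrt(r * (1 - r) / n_trials)

if __name__ == "__main__":
    # Hypothetical inputs: strength scale 50 N and shape 8 (e.g. from a bench-test
    # Weibull fit), in-use load 25 N mean with 5 N standard deviation
    r, se = monte_carlo_reliability(50.0, 8.0, 25.0, 5.0)
    print(f"projected reliability: {r:.4f} +/- {1.96 * se:.4f} (approx. 95% interval)")
```

Because the simulated reliability is only as good as the input distributions, the strength and load models are where the link to bench testing matters most.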

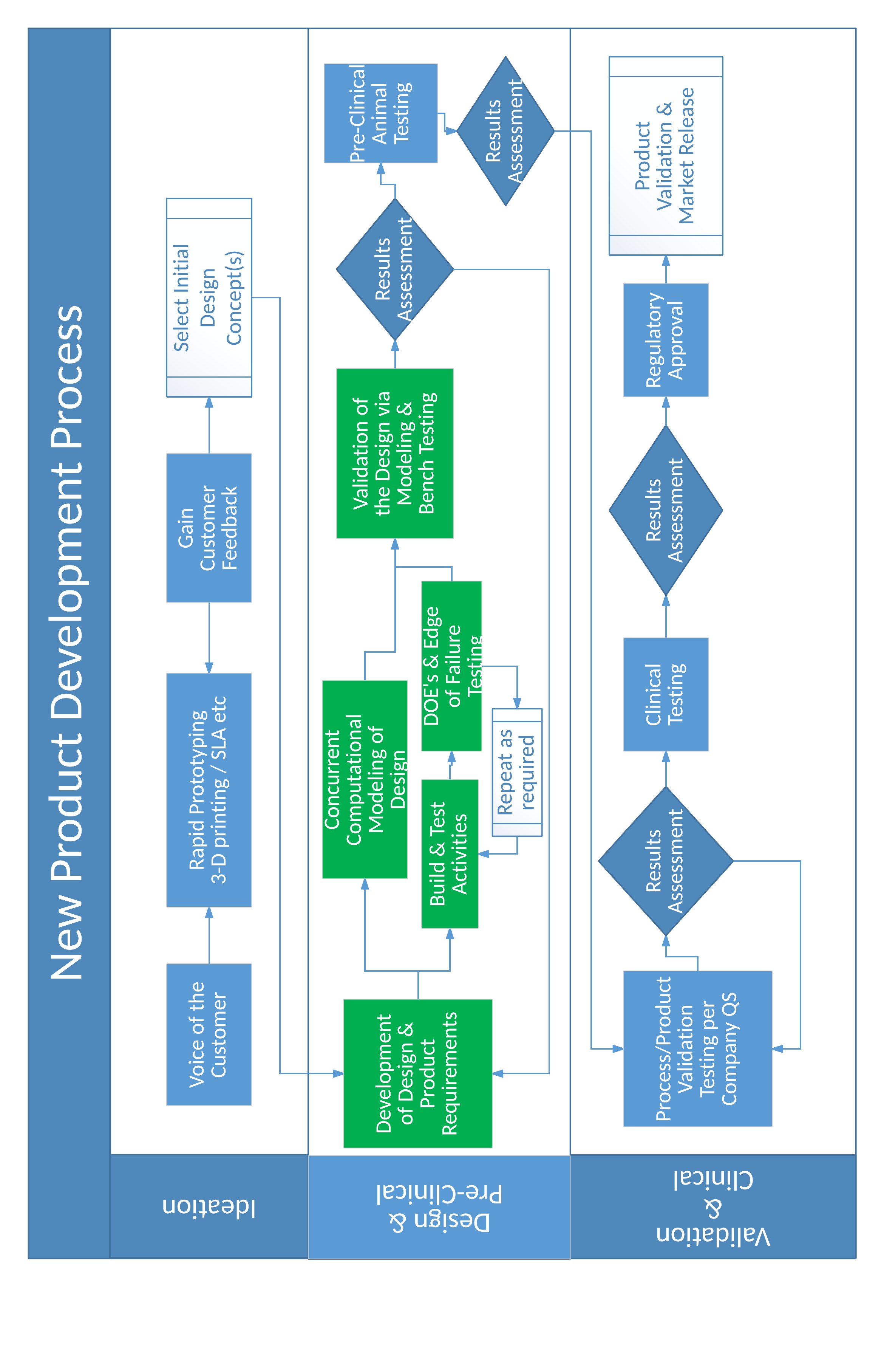

Product Development Process to Deliver Reliability

In general, a good product development process is designed to burn down risk and to manage the risks associated with turning initial prototypes into full-scale production. Again, a focus on reliability can make the difference. A practical product development process can be represented with the following flow diagram. Items highlighted in green are areas where device reliability is a key outcome of the activity. For acute products, the ideation phase and the design and pre-clinical phase typically take 3–6 months, while for chronic products the ideation phase may take 6–9 months or longer and the design and pre-clinical phase may take 12–18 months. The exact timing of each phase cannot be guaranteed up front, given the variation among types of medical devices.

Looking at successes and failures throughout the history of the medical device industry, three things stand out:

- The innovation and development of medical devices has brought life changing results to millions of people.

- Companies and projects start with noble intents: failure was not in their plans.

- The products and the innovations that have stood the test of time are reliable in their performance and in their ability to address the unmet clinical needs.

An old oil filter commercial neatly captures the importance of finding the reliability sweet spot. In it, a mechanic addressed people who stretched out the intervals between maintenance in order to save money: “You can pay me now, or you can pay me later.” Up-front planning and investment in reducing risk and developing a reliable product are vital to the success of the overall project and, in some cases, to the long-term success of the company.