Visual Development and Object Recognition

126 Latent Variables

Learning Objectives

Understand what a latent variable is.

Understand what conditioning is.

The latent variable is the unobserved variable that, if you knew its value, would allow you to explain the data that you can actually observe.

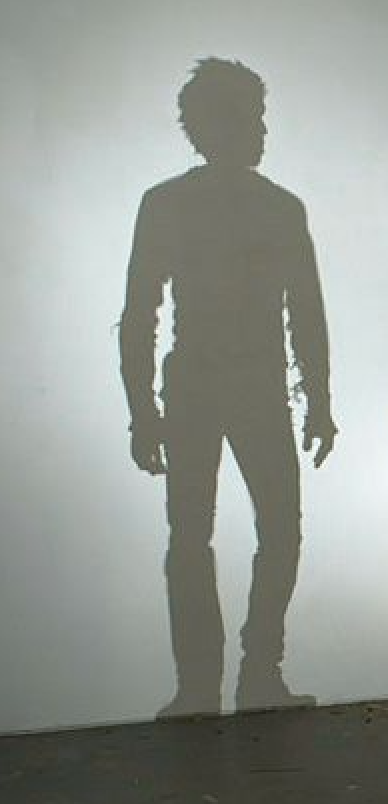

Let’s say for example that you are sitting in a chair facing a blank wall. Behind you is some object and you’re asked to infer what it is. Sometimes a light behind that object is turned on, creating a shadow on the wall that you can see. The latent variable in this case is the object you can’t see, but wish to know what it is. When there is no shadow, all you can do is wildly guess. But even with no shadow, your guess is somewhat informed by your understanding of the world. You might guess that it is an apple before you guessed that it’s a snow leopard, since apples are pretty common and snow leopards are rare. This is the prior: how likely any potential latent variable is to occur in general.

Conditioning is when you are given a shadow caused by the latent object. So, what is the object behind you, given this shadow?

Now this significantly reduces your search space. You see that the shadow is shaped like a human, so that more or less rules out a lot of things, like the snow leopard. But it doesn’t rule out everything else. It could actually be a pile of trash that is placed in such a way that it makes a shadow that’s in the general shape of a human.

This is where likelihood comes in. In this example, likelihood would be how likely the particular shadow you are seeing is caused by a given latent variable (i.e. if the latent variable were a human, how likely would it be that you would observe this particular shadow?). Humans are likely to experience such a pattern, piles of trash are not. This is why the prior and the likelihood are both needed. Maybe the prior for trash is high (there are probably more piles of trash in the world than there are humans), but piles of trash are not likely to create the shadow that you are seeing. These two things combined give you the posterior.

If you were given more samples (for example, if the object was rotated to cause a new shadow), you might have to update your posterior. Maybe when the object is rotated, the shadow becomes formless, in which case the posterior for pile of trash suddenly increases significantly. But maybe the shadow looks like the profile of a human. Human is still a good explanation of the shadow.

The brain’s impossible job is to infer what is out in the world (latent variable) using only patterns of photons hitting the retina. In the case of a hierarchical bayesian model of vision in the brain, this sort of conditional inference is repeated at every level of the brain, with the posterior at one level acting as the input/evidence to the one above it. And so in this case the latent variable takes on the form of different visual features that cause other visual features at different levels of abstractness. That is, V1 is inferring the latent cause of the activity in LGN (perhaps certain edges caused that pattern in LGN), V2 is inferring the latent cause of the edges encoded in V1 (perhaps a certain shape caused those edges encoded in V1), and so on all the way up to object category or beyond. Each area is describing the latent variable that would explain the pattern of activity that it is receiving from the area below it and it does this describing in terms of the types of features that it encodes.

A generative model of imagery is reversing this direction of inference. It would be like starting with knowing what the object behind you was and inferring what kind of shadow it would make.

Still unclear on what a prior, posterior, and conditioning means?

Check “The Monty Hall Problem” out!

Picture yourself at the threshold of three doors, behind one of which lies a brand new car, and behind the others, mere goats. Your first pick carries a hopeful 1/3 chance—a prior bet on where the prize might be. The plot thickens as the host reveals a goat behind one of the doors you didn’t pick, nudging you to reconsider your choice. This moment of revelation is where conditioning shines, transforming your initial guess based on fresh insight. Suddenly, the door left unchosen blooms with possibility, its chance of concealing the car soaring to 2/3—a posterior probability updated by the new information.

This intuitive, real-world example clarifies how prior beliefs are updated in the light of new evidence, a foundational principle in understanding latent variables and the process of conditioning.

There is also a youtube video that can help you easier understand the whole concept.